问题描述

当prover异常,或者某些原因导致各个服务不稳定时,prover_jobs 卡在in_gpu_proof 状态,跳过的证明无法自恢复进行补全。

测试数据

主节点

zksync_local=# select l1_batch_number, status, created_at from prover_jobs order by created_at desc;

l1_batch_number | status | created_at

-----------------+--------------+----------------------------

1147 | successful | 2023-09-06 05:58:18.199706

1146 | successful | 2023-09-06 02:57:31.196466

1145 | successful | 2023-09-06 02:19:07.253418

1144 | successful | 2023-09-06 01:46:06.18188

1143 | in_gpu_proof | 2023-09-05 11:27:41.230814

1142 | in_gpu_proof | 2023-09-05 11:27:37.413038

1141 | in_gpu_proof | 2023-09-05 11:27:31.75103

1140 | in_gpu_proof | 2023-09-05 11:27:28.767585

1139 | in_gpu_proof | 2023-09-05 11:27:23.971785

扩展节点

zksync_local=# select l1_batch_number, status, created_at from prover_jobs order by created_at desc;

l1_batch_number | status | created_at

-----------------+--------------+----------------------------

1147 | in_gpu_proof | 2023-09-07 10:59:47.841166

1146 | in_gpu_proof | 2023-09-07 10:59:46.586542

1145 | in_gpu_proof | 2023-09-07 10:59:45.218178

1144 | in_gpu_proof | 2023-09-07 10:59:43.862459

1143 | in_gpu_proof | 2023-09-07 10:59:42.610455

1142 | in_gpu_proof | 2023-09-07 10:59:41.344974

1141 | in_gpu_proof | 2023-09-07 10:59:39.956309

1140 | in_gpu_proof | 2023-09-07 10:59:38.589833

1139 | in_gpu_proof | 2023-09-07 10:59:37.219

跟进代码

先确认下当前zkSync是否有自恢复的逻辑

- 查看数据操作core/lib/dal/src/prover_dal.rs,找到自恢复数据库操作requeue_stuck_jobs

- 找到具体执行服务模块housekeeper,并以默认加载

#[arg(

long,

default_value = "api,tree,eth,data_fetcher,state_keeper,witness_generator,housekeeper"

)]

components: ComponentsToRun,

2023-09-08T12:43:43.561336Z INFO zksync_core: Starting the components: [HttpApi, WsApi, ExplorerApi, Tree, EthWatcher, EthTxAggregator, EthTxManager, DataFetcher, StateKeeper, WitnessGenerator(None, BasicCircuits), WitnessGenerator(None, LeafAggregation), WitnessGenerator(None, NodeAggregation), WitnessGenerator(None, Scheduler), Housekeeper]

- housekeeper初始化

2023-09-08T12:43:47.244008Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: GcsBlobCleaner with frequency: 60000 ms

2023-09-08T12:43:47.244008Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: WitnessGeneratorStatsReporter with frequency: 10000 ms

2023-09-08T12:43:47.244016Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: GpuProverQueueMonitor with frequency: 10000 ms

2023-09-08T12:43:47.244037Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: ProverStatsReporter with frequency: 5000 ms

2023-09-08T12:43:47.244048Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: WaitingToQueuedWitnessJobMover with frequency: 30000 ms

2023-09-08T12:43:47.244050Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: ProverJobRetryManager with frequency: 300000 ms

2023-09-08T12:43:47.244030Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: L1BatchMetricsReporter with frequency: 10000 ms

2023-09-08T12:43:47.244171Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: FriProverJobRetryManager with frequency: 30000 ms

2023-09-08T12:43:47.244281Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: WaitingToQueuedFriWitnessJobMover with frequency: 40000 ms

2023-09-08T12:43:47.244293Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: SchedulerCircuitQueuer with frequency: 40000 ms

2023-09-08T12:43:47.244294Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: WitnessGeneratorStatsReporter with frequency: 10000 ms

2023-09-08T12:43:47.244300Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: FriWitnessGeneratorJobRetryManager with frequency: 30000 ms

2023-09-08T12:43:47.244296Z INFO zksync_core::house_keeper::periodic_job: Starting periodic job: FriProverStatsReporter with frequency: 30000 ms

此时zksync-server日志循环等待新证明

2023-09-08T12:43:48.092403Z INFO zksync_core::eth_sender::opside_send_plug::manage_proof_plug: submit_pending_proofs finish

2023-09-08T12:43:48.092417Z INFO zksync_core::eth_sender::opside_send_plug::manage_proof_plug: manage proof start try_fetch_proof_to_send

2023-09-08T12:43:48.092433Z INFO zksync_core::eth_sender::opside_send_plug::manage_proof_plug: manage proof: process_resend receiver for start signal

2023-09-08T12:43:48.445424Z INFO zksync_core::eth_sender::opside_send_plug::manage_proof_plug: try_fetch_proof_to_send: check dest block:681 commit

2023-09-08T12:44:08.927334Z INFO zksync_core::eth_sender::opside_send_plug::manage_proof_plug: sequence_batch_info.block_number == 0 at batch:681

- 判断housekeeper检查服务是否正常被执行

async fn run_routine_task(&mut self) {

let stuck_jobs = self

.prover_connection_pool

.access_storage()

.await

.prover_dal()

.requeue_stuck_jobs(self.processing_timeout, self.max_attempts)

.await;

let job_len = stuck_jobs.len();

for stuck_job in stuck_jobs {

vlog::info!("re-queuing prover job {:?}", stuck_job);

}

// vlog::info!("server.prover.requeued_jobs{}", job_len as u64);

// vlog::info!("server.prover.self.processing_timeout{}", self.processing_timeout.as_secs() as u64);

// vlog::info!("server.prover.self.max_attempts{}", self.max_attempts as u64);

metrics::counter!("server.prover.requeued_jobs", job_len as u64);

}

self.processing_timeout : 2700s (45分钟)

self.max_attempts: 1

经过测试,run_routine_task被正常定期执行,但是没有执行任务

2023-09-08T13:13:17.988849Z INFO zksync_core::house_keeper::prover_job_retry_manager: server.prover.requeued_jobs0

2023-09-08T13:13:17.988864Z INFO zksync_core::house_keeper::prover_job_retry_manager: server.prover.self.processing_timeout2700

2023-09-08T13:13:17.988873Z INFO zksync_core::house_keeper::prover_job_retry_manager: server.prover.self.max_attempts1

- 查看run_routine_task具体数据库操作

UPDATE prover_jobs

SET status = 'queued', attempts = attempts + 1, updated_at = now(), processing_started_at = now()

WHERE (status = 'in_progress' AND processing_started_at <= now() - $1::interval AND attempts < $2)

OR (status = 'in_gpu_proof' AND processing_started_at <= now() - $1::interval AND attempts < $2)

OR (status = 'failed' AND attempts < $2)

RETURNING id, status, attempts

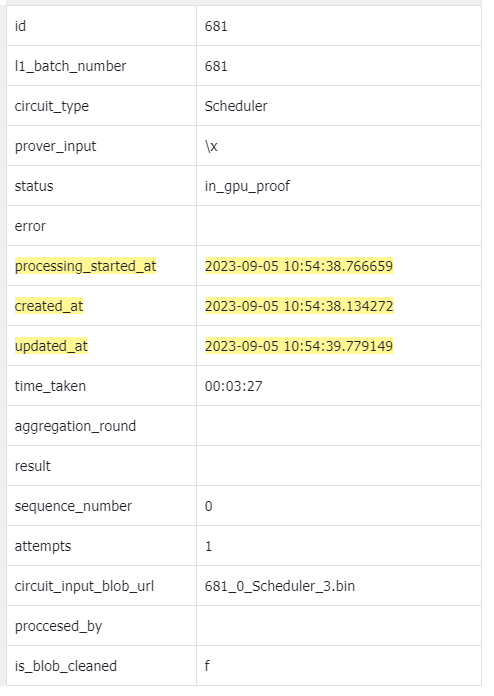

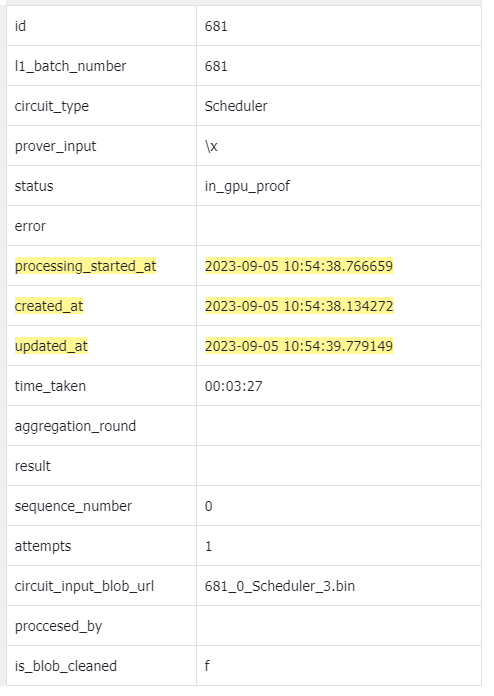

对照681数据

总结分析

对于zkSync本身存在自恢复逻辑housekeeper->run->run_routine_task->requeue_stuck_jobs

然后根据self.processing_timeout, self.max_attempts两个配置参数,进行数据库查询

self.processing_timeout : 2700s (45分钟)

self.max_attempts: 1

UPDATE prover_jobs

SET status = 'queued', attempts = attempts + 1, updated_at = now(), processing_started_at = now()

WHERE (status = 'in_progress' AND processing_started_at <= now() - $1::interval AND attempts < $2)

OR (status = 'in_gpu_proof' AND processing_started_at <= now() - $1::interval AND attempts < $2)

OR (status = 'failed' AND attempts < $2)

RETURNING id, status, attempts

初始任务状态为 queued,当任务成功下发到prover时,状态变更为in_gpu_proof,如果当前任务没有在设置时间内processing_timeout完成,则将当前状态改回 queued,重新下发prover(可能是其他prover)

注意:当状态变更为in_gpu_proof时,attempts 会加1

问题:由于默认max_attempts等于1,所以无法进行run_routine_task

解决:增大max_attempts,5

同时需要注意processing_timeout,应大于支持最低显卡类型,单显卡,运行的时间,否则可能导致算力浪费。

PROVER_NON_GPU_MAX_ATTEMPTS=5

PROVER_NON_GPU_GENERATION_TIMEOUT_IN_SECS=3600

方案验证

修改环境变量后

2023-09-08T14:00:44.990770Z INFO zksync_core::house_keeper::prover_job_retry_manager: re-queuing prover job StuckProverJobs { id: 1149, status: "queued", attempts: 2 }

- 相应in_gpu_proof任务状态更新为queued

- 链接的prover开始proof生成